After looking at methods of extracting a base frequency from a live audio input, I found two possible solutions. One is a Processing library called Ess and the other is a Pure Data object called fiddle~. After playing around with both, I decided to use fiddle~ since it readily spits out the data I need without the kludge of additional methods that Ess would require to extract what I want.

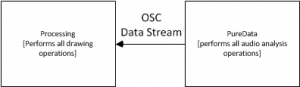

This decision makes things interesting: although audio decomposition is easy in Pure Data, visuals [especially drawing] are exceptionally difficult there, so I would rather use Processing for those. Splitting the functionality between two separate programming frameworks should make things easier to develop, but it means I need some way of passing data between them. For that, I’m going to use Open Sound Control [OSC].

OSC passes formatted messages asynchronously from one system to another, typically over UDP. The advantage of using it over, say, serial comms or straight TCP sockets is that both Processing and PD have solid OSC implementations and tutorials to ease newbies into the process.
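To make "formatted messages" a bit more concrete, here is a minimal sketch of what a two-float message looks like on the wire, following the OSC 1.0 layout (null-padded address string, null-padded type tag string, then big-endian float32 arguments). The class and method names are made up for illustration, and in practice the OSC libraries on both sides do this packing for you.

```java
import java.io.ByteArrayOutputStream;
import java.nio.ByteBuffer;
import java.nio.charset.StandardCharsets;

// Illustrative only: hand-packs one OSC message so the byte layout is visible.
class OscWireSketch {
  // OSC strings are ASCII, null-terminated, and padded with nulls to a multiple of 4 bytes.
  static void writePaddedString(ByteArrayOutputStream out, String s) {
    byte[] ascii = s.getBytes(StandardCharsets.US_ASCII);
    out.write(ascii, 0, ascii.length);
    int pad = 4 - (ascii.length % 4); // 1..4 nulls, so a terminator is always present
    for (int i = 0; i < pad; i++) {
      out.write(0);
    }
  }

  // Builds an OSC message with an address pattern, a ",ff" type tag, and two floats.
  static byte[] encodeTwoFloats(String address, float a, float b) {
    ByteArrayOutputStream out = new ByteArrayOutputStream();
    writePaddedString(out, address); // e.g. "/sound" -> 8 bytes
    writePaddedString(out, ",ff");   // type tag string -> 4 bytes
    // ByteBuffer is big-endian by default, which is what OSC expects.
    byte[] args = ByteBuffer.allocate(8).putFloat(a).putFloat(b).array();
    out.write(args, 0, args.length);
    return out.toByteArray();
  }

  public static void main(String[] args) {
    byte[] packet = encodeTwoFloats("/sound", 440.0f, 0.5f);
    System.out.println(packet.length + " bytes"); // prints "20 bytes"
  }
}
```

The whole message fits comfortably in a single UDP datagram, which is part of why OSC works well for streaming small control values.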

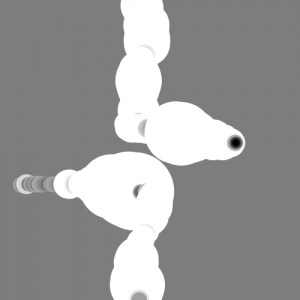

Using OSC, the final project will look something like the diagram above, with the two programs running completely separately from each other aside from the Pure Data audio info being passed to Processing.

Audio Extraction

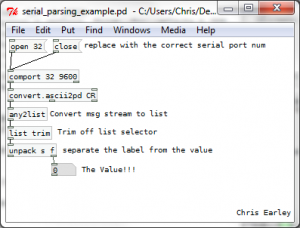

Taking everything I had decided and researched, I jumped into throwing together a PD patch, and after a few false starts and some reading of object help patches, I came up with this:

The patch takes in live input from whichever mic port is set up and runs it through fiddle~. fiddle~ then outputs a two-value packet that contains the loudest frequency and its amplitude. From there I filter and map the values so that my job will be easier in Processing. Lastly, I re-pack them and send out an OSC message addressed to “/sound”. Just playing around with it looks promising: after some experimentation, you can dependably control the values to do what you want.
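The filtering and mapping happens inside the PD patch, so there is no text code to show for it. As a rough equivalent, here is a small Java sketch of the same idea: smooth a jittery value with a simple one-pole low-pass, then clamp and rescale it into a friendlier range. The names, the smoothing factor, and the input and output ranges are all assumptions picked for illustration, not the values used in the patch.

```java
// Illustrative only: a text version of "filter and map", not the actual PD patch logic.
class SoundMapSketch {
  // Linearly rescales v from [inLo, inHi] to [outLo, outHi], clamping out-of-range input.
  static float mapClamped(float v, float inLo, float inHi, float outLo, float outHi) {
    float t = (v - inLo) / (inHi - inLo);
    t = Math.max(0f, Math.min(1f, t));
    return outLo + t * (outHi - outLo);
  }

  // One-pole low-pass: each new sample pulls the state a fraction alpha toward itself.
  static class Smoother {
    private final float alpha; // 0..1, smaller means smoother but slower to react
    private float state;
    private boolean primed = false;

    Smoother(float alpha) {
      this.alpha = alpha;
    }

    float next(float x) {
      if (!primed) {
        state = x; // start at the first sample instead of ramping up from zero
        primed = true;
      } else {
        state += alpha * (x - state);
      }
      return state;
    }
  }

  public static void main(String[] args) {
    Smoother freqSmoother = new Smoother(0.2f); // hypothetical smoothing factor
    float[] rawFreqs = {220f, 230f, 900f, 225f}; // made-up readings with one glitch
    for (float raw : rawFreqs) {
      float smooth = freqSmoother.next(raw);
      // Hypothetical ranges: 80..1000 Hz in, 0..1 out.
      System.out.println(mapClamped(smooth, 80f, 1000f, 0f, 1f));
    }
  }
}
```

Doing this before sending means the receiving side can treat both values as already well-behaved.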

Visual Generation

Since I now have two values that I can play with, I can start thinking about how to apply them. Since I’m an avid Processing user, I decided to reuse an old class of mine that simulates a “dot” that has an angle and linear velocity. I did this since the class structure already had all the update, draw, and setup methods defined from an older project.

Now all I have to do is map the values streaming in from PD to dot attributes and fidget around until I get something I like. Using the OSC parsing example on the oscP5 library site, I took their structure and created this function:

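```java
// Called by oscP5 every time an OSC message arrives.
void oscEvent(OscMessage theOscMessage) {
  // Only handle messages addressed to "/sound"...
  if (theOscMessage.checkAddrPattern("/sound")) {
    // ...that carry exactly two floats (typetag "ff").
    if (theOscMessage.checkTypetag("ff")) {
      /* parse theOscMessage and extract the values */
      float freq = theOscMessage.get(0).floatValue();
      float amp = theOscMessage.get(1).floatValue();
      println("sound: " + freq + ", " + amp);
      voiceDot.updateDotVelocities(freq, amp);
      return;
    }
  }
}
```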

Every time the program receives a new OSC message, a method named oscEvent() is called and the above code is executed. As you can see, all it does is check for the correct address and type format and, if everything checks out, rip out the juicy data and use it to update the dot object. In the updateDotVelocities() method, the private angle and linear velocity fields are set using scaled versions of the frequency and amplitude.
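For a rough idea of what the dot side of this can look like, here is a stripped-down sketch in plain Java. It is not the class from the project: the field names other than linearVel, the scale factors, and the update() step are assumptions for illustration, and I am reading "angle velocity" as the amount the heading turns each frame.

```java
// Illustrative only: a guess at the shape of the dot class, not the project's actual code.
class VoiceDotSketch {
  // Hypothetical scale factors; these are the knobs you end up fidgeting with.
  static final float FREQ_TO_ANGLE_VEL = 0.001f;
  static final float AMP_TO_LINEAR_VEL = 0.05f;

  float x;
  float y;
  private float heading;   // current direction of travel, in radians
  private float angleVel;  // how much the heading turns per frame
  private float linearVel; // how far the dot moves per frame

  VoiceDotSketch(float x, float y) {
    this.x = x;
    this.y = y;
  }

  // Frequency steers the dot, amplitude drives its speed.
  void updateDotVelocities(float freq, float amp) {
    angleVel = freq * FREQ_TO_ANGLE_VEL;
    linearVel = amp * AMP_TO_LINEAR_VEL;
  }

  // Advances the dot by one frame using the most recent velocities.
  void update() {
    heading += angleVel;
    x += (float) Math.cos(heading) * linearVel;
    y += (float) Math.sin(heading) * linearVel;
  }

  public static void main(String[] args) {
    VoiceDotSketch dot = new VoiceDotSketch(0f, 0f);
    dot.updateDotVelocities(440f, 60f); // made-up frequency and amplitude
    for (int frame = 0; frame < 3; frame++) {
      dot.update();
      System.out.println(dot.x + ", " + dot.y);
    }
  }
}
```

Keeping the scaling inside updateDotVelocities() means the OSC handler stays a thin pass-through.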

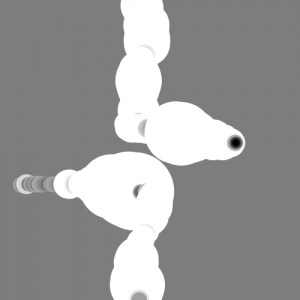

So here’s what the system generates right now:

It looks pretty boring, so let’s go change how the dot object is drawn and make things a bit more interesting.

For any Processing primitive [in this case an ellipse] there are a few things you can control: fill color, size, stroke color, opacity, etc. This time around, I’m going to focus on color and size, since what I am looking for is a bit more variety in the color and size of the dot itself.

After playing around for a bit I have settled on this for the dot draw method:

```java
void drawDot() {
  fill(this.linearVel * 100);
  ellipse(this.x, this.y, this.linearVel * 10 + 10, this.linearVel * 10 + 10);
}
```
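To get a feel for the numbers in drawDot(), here is a small plain-Java sketch that computes the gray level and diameter for a few sample velocities. The helper names are made up, and the clamp to 0..255 is written out explicitly on the assumption that Processing limits a single-argument fill() to its default color range.

```java
// Illustrative only: the arithmetic from drawDot() pulled out so it can be inspected.
class DotStyleSketch {
  // Gray level passed to fill(); clamped here on the assumption of the default 0..255 range.
  static float grayFor(float linearVel) {
    return Math.max(0f, Math.min(255f, linearVel * 100f));
  }

  // Width and height passed to ellipse(); never smaller than 10 pixels.
  static float diameterFor(float linearVel) {
    return linearVel * 10f + 10f;
  }

  public static void main(String[] args) {
    float[] samples = {0f, 1f, 2.5f, 5f}; // made-up linear velocities
    for (float v : samples) {
      System.out.println(v + " -> gray " + grayFor(v) + ", diameter " + diameterFor(v));
    }
  }
}
```

Under that assumption, the gray level tops out at white once the velocity passes 2.55, while the diameter keeps growing, so loud spikes show up mostly as size.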

All that’s happening is that the linear velocity [which is really the amplitude value scaled] is now in control of the fill color and as the amplitude spikes, the size of the dot will expand. The best way to understand what this means is to see a sample output image:

Much cooler. And with that I am done!

Here’s a quick demo of the application: [be sure to mind your speakers for this one]

And for those interested in my work, here’s the source: voice_painter [Be sure to read the README before using]